Continuous Delivery of this blog

I have been using Jekyll for some time, and it gave me the opportunity to automate some things for this blog. I went as far as I could in creating a Continuous Delivery environment for it.

Tooling, stages and more

To build this Continuous Delivery environment I first had to define some tools and stages. Here's a short description of each.

Version Control

I have a private repository on GitHub for all the code and posts, so whatever automation I put in place has to integrate with it.

Build and Deploy tool

Rake is my tool of choice for compiling and deploying the blog. In fact, Rake is currently my tool of choice for building almost anything: its tasks, and the fact that you can write plain Ruby in them, are enough for most of the automation I've been doing.

Compiling

Jekyll is a static blog generator, which is pretty much the same as saying that it reads your files and creates a static website. That's my definition of compilation for this blog: whenever I say compilation, I'm referring to the execution of:

jekyll --pygments

Testing

When I mentioned to my colleagues that I was doing this kind of automation, one of the first questions was "What are you testing it for?" Definitely a fair question. All this automation without something to test would feel like building a Rube Goldberg machine for a simple copy of static files.

What I found later was rather interesting. At first I didn't have any tests, but as soon as the deployment was working I found that I had the perfect environment for doing all kinds of tests.

Currently I test the blog for two things:

- All internal links point to a valid resource.

- No spurious CSS classes are left around cluttering without being used.

Packaging

How do we package a static website? My answer was as simple as you might guess: tar.gz. This is the simplest packaging you can have, it works pretty well on Unix-based systems, and with a small website it doesn't hurt to replace all the files every time I deploy. There's a catch though: you have to clean up the destination, otherwise files that were removed from the site will hang around in the directory indefinitely.
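The catch can be reproduced locally. The following is only a sketch, not the blog's deployment code: it copies a directory instead of unpacking a tar.gz, and the `deploy` method and the file names are made up for illustration.

```ruby
require "fileutils"
require "tmpdir"

# Copy a built site into target_dir. With clean: true the target is emptied
# first, so files deleted from the site do not survive the deploy.
# Note: Dir.children also returns dotfiles, unlike the shell glob "*".
def deploy(site_dir, target_dir, clean:)
  FileUtils.mkdir_p(target_dir)
  if clean
    stale = Dir.children(target_dir).map { |name| File.join(target_dir, name) }
    FileUtils.rm_rf(stale)
  end
  # "site_dir/." copies the directory's contents rather than the directory.
  FileUtils.cp_r(File.join(site_dir, "."), target_dir)
end

Dir.mktmpdir do |tmp|
  site = File.join(tmp, "_site")
  target = File.join(tmp, "www")
  FileUtils.mkdir_p(site)
  File.write(File.join(site, "index.html"), "home")
  File.write(File.join(site, "old-post.html"), "old")
  deploy(site, target, clean: false)

  # The post is deleted and the site is rebuilt without it.
  FileUtils.rm(File.join(site, "old-post.html"))

  deploy(site, target, clean: false)
  p Dir.children(target).sort # old-post.html is still there

  deploy(site, target, clean: true)
  p Dir.children(target).sort # only index.html remains
end
```

Without the cleanup step the deleted post keeps being served, which is exactly the kind of leftover the clean-up avoids.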

Staging environment

Before going to production I set up a staging environment. This environment plays two roles in the pipeline. First, it's where I can run some tests with the website up. Second, it allows me to proofread what is about to get published.

Continuous Integration / Agile Release Management

I'm using ThoughtWorks Go to coordinate all the steps of testing and deploying.

The pipeline

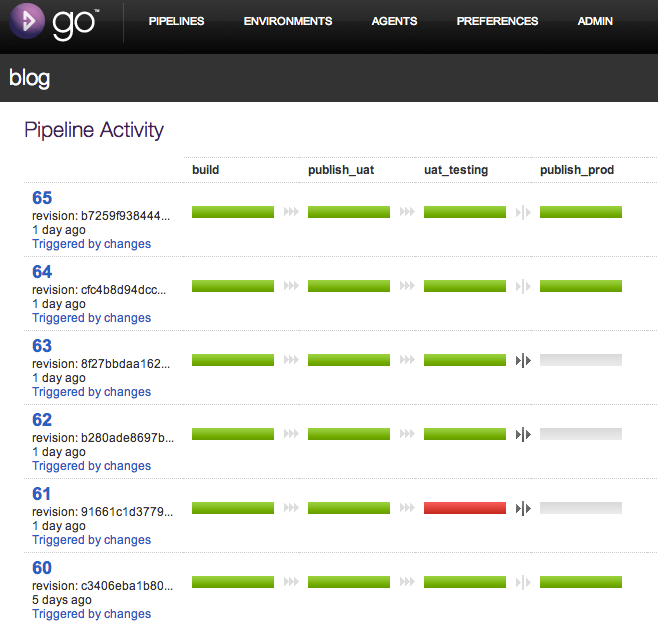

Here's a screenshot of the last couple of runs of my blog's pipeline.

There's nothing fancy about it. It compiles, which involves a couple of steps including packaging; it publishes to the UAT (User Acceptance Test) environment; the internal link tests run; and then there's the last stage, which I trigger manually, to publish to production.

Please note that not every build got published to production. Oftentimes when I'm proofreading or working on the site plumbing I find a problem that I didn't catch in my local environment.

Another thing that might have caught your attention is a broken build. That specific one happened when I added the jQuery library and didn't use an absolute path in the src of the script tag. The issue was discovered by checklink on pages that were in subdirectories and therefore unable to find jquery.js.

Build / Compile stage

More than just running jekyll happens in the build stage. Here are all the tasks currently executed by Go:

bundle install --path vendor/bundle
bundle exec rake build
bundle exec rake archive
bundle exec rake send_pkg

The first task just installs jekyll, rake and their dependencies. Using bundler gives me the option of updating jekyll without having to worry whether the CI's jekyll is updated too. Plus, if the update breaks the website and I don't catch the problem locally, it will be caught by the CI.

When we invoke rake build here's what happens:

task :build => [:compile, :check_css_redundancy]

task :compile => [:pygments_is_installed] do
  run_or_show "jekyll --pygments"
end

task :check_css_redundancy => [:compile] do
  run_or_show "bash -c \"_bin/css-redundancy-checker.rb _site/css/style.css <(find _site -name '*.html' -type f)\" "
end

css-redundancy-checker.rb does the work of checking whether there is any leftover CSS on the site. I tweaked it a little to fit the automation, specifically to exit with a status other than 0 when it finds something, which causes Go to fail the task.
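To give an idea of what such a check involves, here is a rough sketch. It is not the actual css-redundancy-checker.rb: the method names are invented, it only looks at class selectors, and it uses regular expressions where a real tool would use proper CSS and HTML parsers.

```ruby
require "set"

# Collect class names used as selectors in a stylesheet (e.g. ".post-title").
def css_classes(css)
  # Drop comments and innermost declaration blocks so that values such as
  # "1.5em" or "url(img.png)" are not mistaken for class selectors.
  selectors = css.gsub(%r{/\*.*?\*/}m, "").gsub(/\{[^{}]*\}/, " ")
  selectors.scan(/\.(-?[_a-zA-Z][\w-]*)/).flatten.to_set
end

# Collect every class name referenced in class="..." attributes.
def html_classes(html)
  html.scan(/class\s*=\s*["']([^"']*)["']/).flatten.flat_map(&:split).to_set
end

# Classes defined in the stylesheet that no page uses, sorted by name.
def unused_classes(css, html_pages)
  used = html_pages.map { |page| html_classes(page) }.reduce(Set.new, :|)
  (css_classes(css) - used).sort
end

css = <<~CSS
  .post { line-height: 1.5em }
  .unused, a.nav:hover { color: red }
CSS
pages = ['<div class="post"><a class="nav external" href="/">home</a></div>']

p unused_classes(css, pages) # => ["unused"]
```

A script used in the pipeline would then exit with a non-zero status whenever the returned list is not empty.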

If all goes well we arrive at the archive task, which is this one.

task :archive => [:version_info] do
  # Create the archive directory on the first run.
  FileUtils.mkdir ARCHIVE_DIR unless Dir.exist?(ARCHIVE_DIR)
  run_or_show "tar -zcf #{ARCHIVE_DIR}/#{pkg_name} -C _site ."
end

# Package name for the current build, e.g. blog-63.tar.gz.
def pkg_name
  label = ENV['GO_PIPELINE_LABEL']
  fail "Can't do much without environment variable GO_PIPELINE_LABEL" if label.nil?
  "blog-#{label}.tar.gz"
end

As I mentioned earlier, packaging the site is nothing more than creating a tar.gz of the files compiled by jekyll. The GO_PIPELINE_LABEL environment variable is used so that I have a different package for each build. The result of this task is files like these:

- blog-63.tar.gz

- blog-64.tar.gz

- blog-65.tar.gz

After the file is packaged it is sent to the server with scp.

task :send_pkg do
  run_or_show "scp -v #{ARCHIVE_DIR}/#{pkg_name} #{AT_USER + HOST}:tmp/"
end

Here AT_USER is my artifacts user, which has an account on my server just to host the packages. You may think of it as a repository for my artifacts, similar to Yum or Apt, just a much simpler one. This is the end of the build / compile stage.
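All the tasks in this stage call run_or_show, which is not listed in this post. A minimal sketch of such a helper could look like the one below. Its behaviour is an assumption: the DRY_RUN environment variable and the `dry_run:` keyword are hypothetical and may differ from the real helper.

```ruby
# Hypothetical stand-in for the run_or_show helper used by the rake tasks.
# Assumption: in dry-run mode it only prints the command; otherwise it runs
# the command and raises when it fails, so rake (and therefore Go) fails too.
def run_or_show(command, dry_run: ENV.key?("DRY_RUN"))
  puts command
  return true if dry_run

  # system returns false on a non-zero exit and nil if the command
  # could not be started at all.
  ok = system(command)
  raise "Command failed: #{command}" unless ok
  true
end

run_or_show "echo compiling", dry_run: true  # prints the command only
run_or_show "echo compiling", dry_run: false # prints the command, then runs it
```

Raising on failure matters here because a rake task that swallows a failed command would let a broken build continue through the pipeline.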

Publish to User Acceptance Test environment (UAT)

The next stage is publishing the site to the UAT environment. This is accomplished by this rake task:

task :publish, :env do |t, args|
  env = valid_env_parameter args
  run_or_show "ssh -v #{user env} ' [ -f ~#{AT_USER}/tmp/#{pkg_name} ] " \
    " && rm -Rf #{target_dir env}/* " \
    " && tar -zxf ~#{AT_USER}/tmp/#{pkg_name} -C #{target_dir env}' "
end

This is a fancy way to just execute a shell command on the server. It first checks whether the package for the version of the blog we are about to deploy is in the repository (remember, it's just a directory on the server). Then it removes all the files and uncompresses the package. The same task is used for UAT and production; it differs only by a parameter given to rake, which changes the host and the user to be used.
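The helpers valid_env_parameter, user and target_dir are not listed in this post. One way they could be written is sketched below, purely as an illustration. The ENVIRONMENTS table, the user names and the example.com hosts are placeholders, not the real configuration.

```ruby
# Hypothetical environment table; every user, host and path is a placeholder.
# target_dir doubles as the site's domain, since the checklinks task also
# uses it as the address to crawl.
ENVIRONMENTS = {
  "uat"  => { user: "uat-user@uat.example.com",  target_dir: "uat.example.com" },
  "prod" => { user: "prod-user@www.example.com", target_dir: "www.example.com" }
}.freeze

# Return the environment name from the rake task arguments, or fail early
# when it is missing or unknown.
def valid_env_parameter(args)
  env = args[:env].to_s
  return env if ENVIRONMENTS.key?(env)

  raise ArgumentError,
        "Unknown environment #{env.inspect}, expected one of: #{ENVIRONMENTS.keys.join(', ')}"
end

# The user@host string handed to ssh for the given environment.
def user(env)
  ENVIRONMENTS.fetch(env)[:user]
end

# The directory on the server that the package is extracted into.
def target_dir(env)
  ENVIRONMENTS.fetch(env)[:target_dir]
end

env = valid_env_parameter({ env: "uat" })
puts "deploying to #{target_dir env} as #{user env}"
```

With helpers like these the task would be invoked as `rake publish[uat]` or `rake publish[prod]`, and a typo in the environment name fails before anything is deleted on the server.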

UAT Testing

In this stage we currently run just the checklink tests. Here's the task that triggers them:

task :checklinks, [:env] => [:checklinks_is_installed] do |t, args|
  domain = target_dir valid_env_parameter args
  run_or_show "_bin/checklink_wrapper.sh #{domain}"
end

In order to make checklink useful in the pipeline I coded a simple shell wrapper that fails the task if checklink finds a problem. Also, in order to test only internal links and to change the domain against which checklink runs, I had to add a regular expression filter to it. Checklink is good, but it's not so easy to understand how to use its parameters. Here's the wrapper:

#!/bin/bash
set -e

i=0;
while read line
do
  [[ $line =~ 'Code:' ]] && i=$(($i+1));
  echo $line;
done < <(checklink -r -b -X "^((?!$1).)*$" http://$1)

exit $i

This script exits with the number of links that were broken. Again, if the number is different from zero it will break the task and thus the build.
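One caveat with using a count as the exit status: exit statuses are a single byte, so a run with exactly 256 broken links would wrap around to 0 and look like a success. The sketch below shows the same counting logic in Ruby with the status capped. It is not a replacement for the wrapper, and both method names are made up.

```ruby
# Count the lines that report a broken link. Like the wrapper, this assumes
# that checklink prints a line containing "Code:" for each failure.
def broken_link_count(output)
  output.each_line.count { |line| line.include?("Code:") }
end

# Cap the status at 255 so that a large count can never wrap around to 0.
def exit_status_for(count)
  [count, 255].min
end

sample = <<~OUT
  Processing http://example.com/
   Code: 404
  Processing http://example.com/about/
   Code: 500
OUT

count = broken_link_count(sample)
puts "#{count} broken links, exit status #{exit_status_for(count)}"
```

For a small blog the wrap-around is unlikely to be hit in practice, but capping the value costs nothing.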

Publishing to production

This is the same as publishing to UAT: it's the same deployment script, and only the user and host where the site will be published change. This is a manually triggered stage in Go. When I'm confident that I want to release, I push the button and it goes.

Notes and Observations

Let's discuss some principles of Continuous Delivery and how they relate to what I did.

One package, one binary

You will note that the packaging/archiving of the jekyll compiled files is done only once. As mentioned in the Continuous Delivery book, you want to compile only once, then test and deploy this "binary" as many times as you feel like, but without recompiling it. Why? Because if you recompile it, who guarantees that nothing changed from one compilation to the next? When we create a binary or a package we want this package to prove that it is production worthy; it has to face all the challenges we can think of. If it survives, it's production worthy.

Rolling back / Rolling forward

Another important factor in a Continuous Delivery environment is being able to revert your changes. With the current pipeline and deployment scripts I can release whatever version of the blog I want with just the click of a button. In fact I did it just now for the fun of it and reverted the blog to a month-old version. It worked.

In this particular environment there's no actual rollback, just roll forward: the only thing needed is the deployment of an older version.

Extend your pipeline to production, do it as early as possible

When I decided to move my blog to CD I had another publishing routine. It was simpler, but still based on version control triggers. In short, it ran jekyll as soon as I pushed to the repository.

Moving to a proper pipeline and automation changed a lot of things. First, I stopped running jekyll on the version control server which, as you may have already guessed, was the same as the web server. The whole stack was piled up on one server; now the roles of each server are better organized.

I had to go through a lot of back and forth to design a deployment architecture that suits my scenario. A big challenge, and one that I would only see when automating all the way to production, was security. Currently I use DreamHost to host my blog and I use enhanced security for all my users. Maintaining security while still being able to perform automated deployments required some thought and some different strategies than I first expected.

This is why extending your pipeline to production is not something to be left for later. It will pose different challenges from running your application on your local machine: security questions, third-party integration, cleaning up the file system, making sure that a failed deployment does not leave your application in an invalid state, and the list goes on.

Keeping a version number

Another thing that I did just for the fun of it was to add a version number to the blog. If you access this url you can check the current build number of the website, the version control revision and the date of compilation. I don't have any practical use for this information, at least not for now. Here's the task that generates the version.txt file:

task :version_info do
  File.open('_site/version.txt','w') do |f|
    f.write "package: #{pkg_name}\n"
    f.write "date: #{Time.now}\n"
    f.write "revision: #{ENV['GO_REVISION']}\n"
  end
end

That's it.